Parallelization

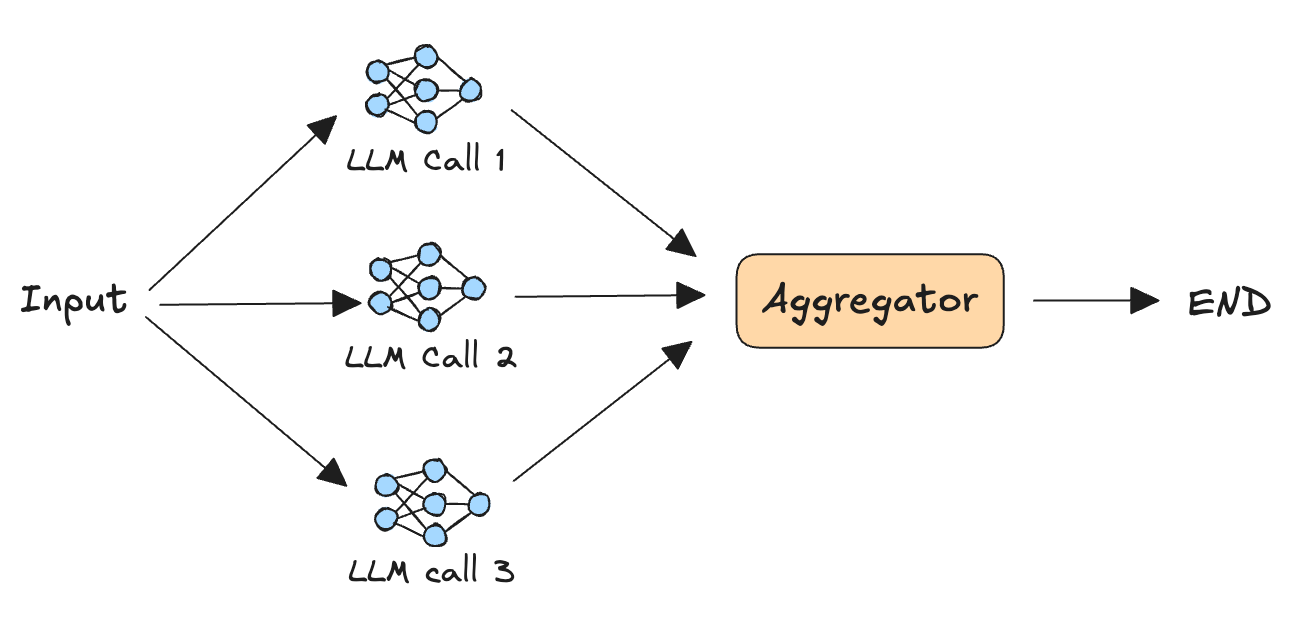

The Parallelization design pattern involves having multiple Large Language Models (LLMs) work simultaneously on a single complex task or its independent subtasks. The core argument is that by distributing the workload, this pattern can significantly reduce latency and improve the quality and reliability of the final output. The outputs from the individual LLMs are then aggregated by a final LLM or a custom logic to form a comprehensive and superior final answer. This approach is particularly effective for tasks that can be broken down into discrete parts, or where multiple perspectives are beneficial.

Key Terms & Definitions

- Parallelization: A design pattern where multiple LLMs process a task concurrently to improve efficiency and accuracy.

- Aggregation: The process of combining the outputs from multiple parallel-running LLMs into a single, cohesive final result. This can be done by another LLM or a custom algorithm.

- Latency: The delay between a request being sent and a response being received. Parallelization aims to reduce this by performing tasks simultaneously.

- LLM-as-a-Judge: A specific use case of parallelization where multiple LLMs are used to evaluate different aspects of a response, providing a more robust and objective assessment than a single model.

- Ensemble Learning: A related concept in machine learning where multiple models are combined to produce a better output than any single model could produce on its own. The Parallelization pattern for LLMs is a form of ensemble learning.

When to use

This pattern is useful for handling tasks that can be split into independent subtasks. Running these subtasks in parallel offers two key benefits: reduced overall latency and improved focus, as each LLM can concentrate on solving specific parts of the problem. In some cases, having multiple LLMs attempt the same task and comparing or combining their results can also improve reliability.

Use cases

- LLM-as-a-judge: A common technique for evaluating LLM responses is using strong LLMs themselves. Using the parallelization design pattern, you can have multiple LLMs or a single LLM with different prompts simultaneously evaluating different aspects of the response.

- Code generation: Given a prompt, use different LLMs to generate code. Then, execute each of the code snippets, use an LLM to analyze their efficiency, and return the most efficient

Questions for Self-Assessment

- How does the Parallelization design pattern address the limitations of a single, powerful LLM? What specific problems is it designed to solve?

- The text mentions two key benefits: reduced latency and improved focus. Explain how these benefits are achieved in the "Code generation" example. What would happen if this were done sequentially?

- Consider the "LLM-as-a-Judge" use case. What are the potential pitfalls of relying on a single LLM to judge a response? How does parallelizing this task with multiple LLMs or prompts make the evaluation more reliable?

- Beyond the use cases mentioned, can you think of another complex task that could be made more efficient or accurate using the Parallelization design pattern? What would be the independent subtasks?

Addressing the Limitations of a Single LLM

The Parallelization design pattern addresses several key limitations of a single, powerful LLM. While a single, large model is capable, it can be slow and prone to "cognitive" errors when dealing with highly complex or multi-faceted tasks. This pattern is designed to solve two specific problems: slowness (latency) and reliability. Instead of waiting for one model to process a huge task, it splits the work, allowing for concurrent processing and cross-validation, which leads to faster, more accurate results.

Code Generation: Reduced Latency & Improved Focus

In the code generation example, these benefits are achieved as follows:

- Reduced Latency: Multiple LLMs are prompted to generate different code snippets for the same problem simultaneously. This means that instead of waiting for one model to try several times to get it right, you receive a variety of potential solutions in a fraction of the time. The final model then quickly selects or synthesizes the best one.

- Improved Focus: Each LLM can be given a slightly different prompt or "persona" to focus on a specific aspect of the code, such as generating code for a particular language, focusing on efficiency, or prioritizing readability. This specialization helps each model concentrate on a specific sub-problem.

If this were done sequentially, a single LLM would have to generate one code snippet at a time, followed by an execution step, analysis, and then another generation attempt if needed. This would be a slow, iterative process, taking much longer to find the optimal solution.

LLM-as-a-Judge: Overcoming Pitfalls

Relying on a single LLM to act as a judge has potential pitfalls, primarily bias, inconsistency, and a limited perspective. A single model might favor a certain style, logical structure, or even get "stuck" on a particular point, leading to a subjective and potentially flawed evaluation.

Parallelizing this task makes the evaluation more reliable by introducing a form of jury-based consensus. You can use multiple LLMs, each with a different prompt, to evaluate a response from different angles. For example:

- LLM 1 judges factual accuracy.

- LLM 2 judges logical consistency.

- LLM 3 judges the tone and style.

By aggregating these independent evaluations, the final verdict is more robust and comprehensive, mitigating the biases of any single model. It's like having a team of experts, each reviewing a different aspect of the same document, rather than relying on one person's sole opinion.

A New Application: Detailed Trip Planning

A new complex task that could be made more efficient and accurate using the Parallelization design pattern is detailed, personalized trip planning. A single LLM attempting to create a full itinerary might struggle to integrate all the necessary details and constraints (e.g., budget, interests, travel times, and real-time availability).

The independent subtasks would be:

- Subtask 1 (Budgeting): An LLM focuses on finding flights and accommodations within the specified budget.

- Subtask 2 (Activities): Another LLM researches and suggests activities based on the user's interests (e.g., museums, outdoor activities, dining).

- Subtask 3 (Logistics): A third LLM calculates optimal travel times between locations and suggests transportation options.

- Subtask 4 (Customization): A final LLM or the main aggregator combines all the outputs and formats them into a single, cohesive, day-by-day itinerary, ensuring it all fits together logically and seamlessly.