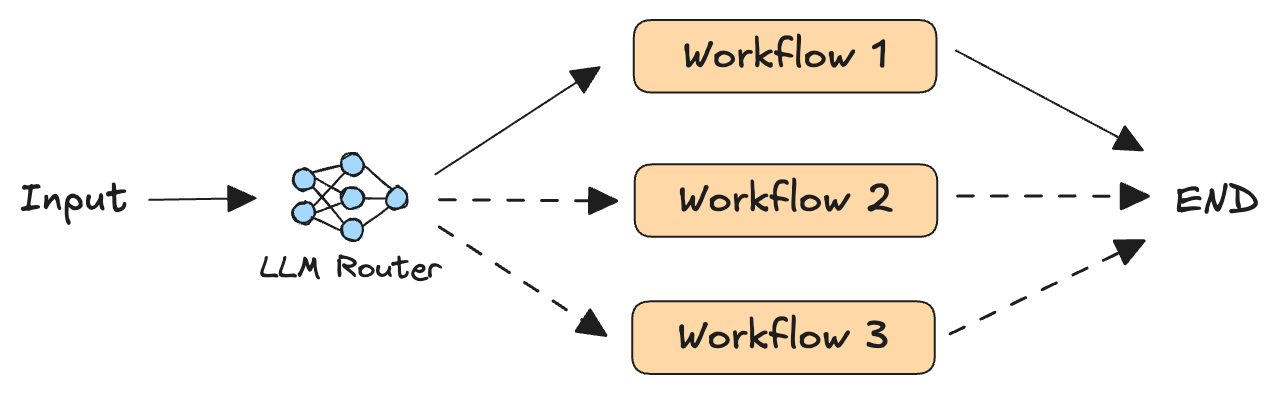

LLM as Router

LLM as Router is a software design pattern in which a Large Language Model (LLM) acts as a "smart switch": it categorizes each incoming user query and routes it to one of several specialized workflows. The central argument is that this approach handles diverse and complex requests more flexibly, scalably, and cost-effectively than relying on a single, monolithic LLM. Because each query goes to the backend process best suited to it, the application can use cheaper and faster resources where they suffice and reserve specialized or more capable ones for the queries that need them.

Key Terms & Definitions

- LLM as Router: A design pattern where a central LLM acts as an intelligent classifier to direct user queries to different downstream processes.

- Specialized Workflows: A set of distinct, pre-defined tasks or services. These can include:

  - Different LLMs: Using a combination of small, fast models and large, powerful ones.

  - Fine-tuned Models: Models specifically trained for a particular domain (e.g., medical, legal).

  - Microservices and APIs: External tools that perform specific functions, like making a database query or fetching real-time data.

- Routing: The process of a router LLM analyzing an input query and deciding which specialized workflow is the most suitable for generating a response.

- Cost-Efficient Routing: A key advantage of this pattern, where simple queries are routed to cheaper, smaller models, while complex queries are reserved for more expensive, powerful models.

- Semantic Routing: An advanced form of routing where the LLM routes based on the meaning or "intent" of a query, rather than just keywords.

When to use

This pattern works well when incoming requests span diverse topics and complexity levels, provided they can be reliably categorized based on their content. The main goal is to ensure accurate responses despite the broad scope of the application's inputs.

Use cases

- Customer service: Based on the nature of the request, route customer service requests to downstream processes for different departments.

- Resource-efficient routing: Route simple questions to smaller and/or cheaper LLMs and complex questions to reasoning models.
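The customer service use case can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the category names, the handler functions, and the `call_llm` callable are all hypothetical, and `fake_llm` is a stand-in so the example runs without a real model.

```python
from typing import Callable, Dict

# Hypothetical handlers standing in for downstream departmental workflows.
def handle_billing(query: str) -> str:
    return f"[billing] {query}"

def handle_tech_support(query: str) -> str:
    return f"[tech_support] {query}"

def handle_general(query: str) -> str:
    return f"[general] {query}"

# Dispatch table: category label -> workflow.
ROUTES: Dict[str, Callable[[str], str]] = {
    "billing": handle_billing,
    "tech_support": handle_tech_support,
    "general": handle_general,
}

def build_router_prompt(query: str) -> str:
    """Ask the router model to answer with a single category name."""
    labels = ", ".join(ROUTES)
    return (
        "Classify the customer request into exactly one of these categories: "
        f"{labels}.\nReply with the category name only.\n\nRequest: {query}"
    )

def route(query: str, call_llm: Callable[[str], str]) -> str:
    """Classify the query with the router model, then dispatch it."""
    label = call_llm(build_router_prompt(query)).strip().lower()
    # Any label the router invents falls back to the general workflow.
    handler = ROUTES.get(label, handle_general)
    return handler(query)

def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call (assumption: replace with your client)."""
    request = prompt.split("Request:", 1)[1].lower()
    if "invoice" in request or "charge" in request:
        return "Billing"
    if "error" in request or "crash" in request:
        return "tech_support"
    return "general"

print(route("I was charged twice on my invoice", fake_llm))
```

Passing the model call in as a function keeps the routing logic independent of any particular provider and makes it easy to test with a stub.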

How LLM as Router Addresses Quality vs. Cost

The "LLM as Router" design pattern addresses the inherent trade-off between the quality and cost of an LLM-powered application by intelligently directing queries. It routes simple, low-stakes questions to smaller, cheaper, and faster models, which are sufficient for basic tasks. Simultaneously, it reserves larger, more expensive, and more capable models for complex queries that require advanced reasoning. This selective routing ensures that the application maintains high-quality responses for challenging tasks without incurring the high cost of using a powerful model for every single interaction. This resource-efficient approach provides a scalable and economically viable solution.
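The trade-off can be made concrete with a small sketch. The tier names and per-token prices below are invented for illustration only, and the `classify` callable stands in for the router model; the estimate also ignores the cost of the routing call itself, which a real budget would need to include.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class ModelTier:
    name: str
    cost_per_1k_tokens: float  # hypothetical price, arbitrary currency units

# Hypothetical tiers; real model names and prices will differ.
SMALL = ModelTier("small-model", 0.10)
LARGE = ModelTier("large-model", 2.00)

def pick_tier(query: str, classify: Callable[[str], str]) -> ModelTier:
    """Send 'simple' queries to the small tier and everything else to the large one.

    `classify` is assumed to return 'simple' or 'complex'. Unexpected output
    is treated as complex, so quality is protected at the expense of cost.
    """
    label = classify(query).strip().lower()
    return SMALL if label == "simple" else LARGE

def blended_cost(simple_fraction: float, tokens_k: float = 1.0) -> float:
    """Expected cost per query when `simple_fraction` of traffic is simple."""
    return tokens_k * (
        simple_fraction * SMALL.cost_per_1k_tokens
        + (1.0 - simple_fraction) * LARGE.cost_per_1k_tokens
    )

print(pick_tier("What are your opening hours?", lambda q: "simple").name)
print(round(blended_cost(0.8), 2))
```

Under these made-up prices, routing 80% of traffic to the small tier costs roughly 0.48 per thousand tokens, against 2.00 if every query went to the large model.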

Smart vs. Traditional Switches

A traditional, rule-based switch operates on a static set of pre-defined rules (e.g., if a query contains the keyword "billing," route to the billing department). A "smart switch," powered by an LLM, is different because it uses semantic understanding and probabilistic reasoning to categorize queries. Instead of relying on rigid keywords, it can infer the intent of a user's request.
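For contrast, a traditional switch might look like the sketch below. The keyword table and department names are hypothetical. The second call shows the limitation described above: a billing-related question that contains none of the listed keywords matches no rule.

```python
from typing import Optional

# Static rules: keyword -> department (hypothetical examples).
KEYWORD_RULES = {
    "billing": "billing",
    "refund": "billing",
    "password": "tech_support",
}

def keyword_route(query: str) -> Optional[str]:
    """Return the department for the first matching keyword, or None."""
    text = query.lower()
    for keyword, department in KEYWORD_RULES.items():
        if keyword in text:
            return department
    return None

print(keyword_route("I have a billing question"))       # billing
print(keyword_route("Why was my card charged twice?"))  # None
```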

Advantages of a Probabilistic Model:

- Flexibility: It can handle a wide variety of queries, including those with nuanced or ambiguous phrasing that wouldn't match a rule-based system.

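- Scalability: It can often handle new phrasings and query types that map to existing workflows without a developer manually adding rules; adding a new workflow typically means updating the router's category list rather than writing new matching logic.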

Disadvantages of a Probabilistic Model:

- Inaccuracy: There's a chance the model will misclassify a query, leading to incorrect routing.

- Lack of Determinism: The same query might not always be routed in the same way, which can make debugging and auditing difficult.

Challenges and Mitigating Risks

A major challenge of the "LLM as Router" pattern is incorrect categorization, where the router LLM misinterprets a query and sends it to the wrong specialized workflow. For example, a query about a "payment plan" might be mistakenly routed to a "product support" team instead of the "billing" team. The result can be a delayed or inaccurate response, which frustrates the user.

To mitigate this risk, you can employ several strategies:

- Confidence Scoring: Program the router to provide a confidence score for its routing decision. If the score is low, the system can ask for clarification or revert to a fallback workflow, like routing to a human agent.

- Chained Fallback: Set up a sequential chain where a failed or low-confidence routing attempt triggers a more general-purpose model or a final human review.

- Human-in-the-Loop: For critical applications, route a small percentage of queries to a human team for review and continuous model improvement. This helps catch errors and provides valuable feedback for future training.
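The three strategies can be combined, as in the following sketch. It assumes each router replies with JSON of the form `{"label": ..., "confidence": ...}`; that format, the 0.7 threshold, the `human_review` label, and the 5% audit rate are illustrative choices rather than part of the pattern. Note that a confidence value reported by a model is not guaranteed to be well calibrated, so a threshold like this should be tuned against labeled examples.

```python
import json
import random
from dataclasses import dataclass
from typing import Callable, Iterable, List

@dataclass
class Decision:
    label: str
    confidence: float

def parse_decision(raw: str) -> Decision:
    """Parse the router's JSON reply; malformed output counts as zero confidence."""
    try:
        data = json.loads(raw)
        return Decision(str(data["label"]), float(data["confidence"]))
    except (ValueError, KeyError, TypeError):
        return Decision("unknown", 0.0)

def route_with_fallback(
    query: str,
    routers: List[Callable[[str], str]],
    known_labels: Iterable[str],
    threshold: float = 0.7,
) -> str:
    """Try each router in order (e.g. small model, then a more general one).

    The first decision that names a known label with enough confidence wins.
    If every router fails, the query is handed to a human.
    """
    allowed = set(known_labels)
    for call_router in routers:
        decision = parse_decision(call_router(query))
        if decision.label in allowed and decision.confidence >= threshold:
            return decision.label
    return "human_review"

def sample_for_audit(rng: random.Random, rate: float = 0.05) -> bool:
    """Human-in-the-loop: flag roughly `rate` of routed queries for review."""
    return rng.random() < rate

# Stub routers standing in for real model calls.
unsure = lambda q: '{"label": "product_support", "confidence": 0.4}'
confident = lambda q: '{"label": "billing", "confidence": 0.9}'

labels = ["billing", "product_support"]
print(route_with_fallback("Can I change my payment plan?", [unsure, confident], labels))
print(route_with_fallback("Can I change my payment plan?", [unsure], labels))
```

In the first call the low-confidence answer from the first router is skipped and the second router's decision is used; in the second call no router is confident enough, so the query goes to human review.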

Relation to Multi-Agent Systems

The "LLM as Router" pattern can be seen as a simpler form of a multi-agent system.

- How it's simpler: The router is a single, central agent whose sole purpose is to classify and delegate tasks. It does not perform the tasks itself, nor does it typically communicate with the other "agents" (the specialized workflows) in a back-and-forth manner. It's a one-way street: once the router has classified and routed a query, its part of the job is done.

- Where it falls short: A full agentic architecture is more complex and dynamic. It involves multiple, distinct agents that can interact with each other, execute tasks, and collaborate to solve a problem. For instance, a multi-agent system might have one agent for research, another for writing, and a third for editing, all working together and communicating to produce a final document. The LLM as Router lacks this collaborative, autonomous, and conversational complexity.